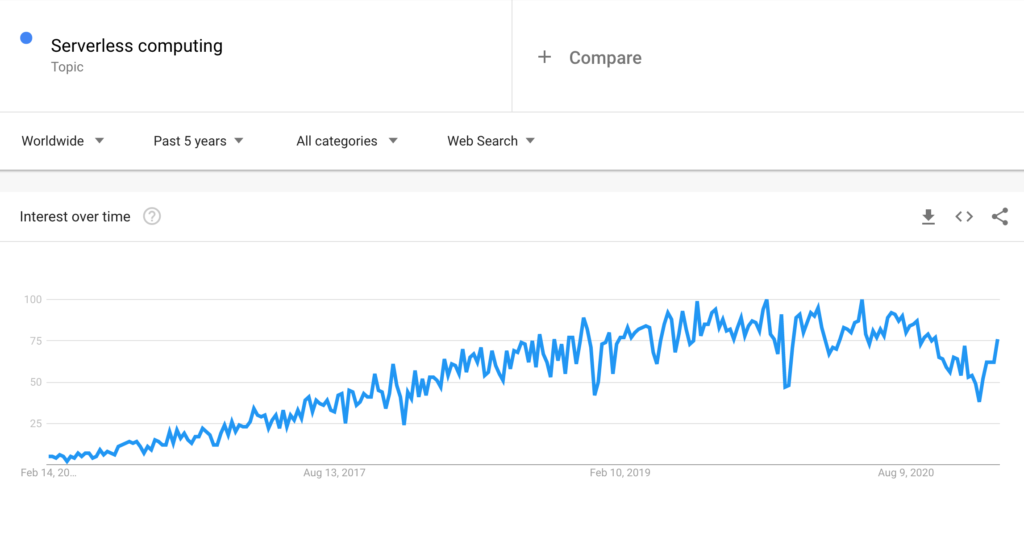

Serverless technologies have seen a meteoric rise over the past 5 years. With the launch of FaaS (Functions as a Service) offerings such as AWS Lambda and Azure Functions, developers all over the world are shifting workloads away from dedicated server infrastructure and toward on-demand, or serverless, computing.

In fact, check out this graph below charting the popularity of Serverless Computing over the past 10 years.

But is serverless taking over? There’s some debate over this question, largely because the term ‘serverless’ is overloaded with multiple meanings. The thing is, serverless is a tool, and like any tool, there is a time and a place when it is useful, and other times when it is harmful.

In this article, I’m going to break down the different meanings of serverless, some of the pros and cons of using the technology, and whether or not it’s becoming the next big thing. I’ll be using a lot of examples referring to AWS Lambda since it is by far the most popular serverless compute option. So strap on your boots and let’s go.

What is Serverless?

Serverless generally refers to opaque compute infrastructure; that is, developers get compute functionality to host REST APIs or process data without having to worry about the actual infrastructure behind the scenes.

Under the hood, these serverless providers essentially maintain a massive pool of compute instances and provision them on demand with the customer’s application code whenever requests come in. There are pros and cons to this approach (discussed later) that make the ‘is serverless the next big thing?’ question worth discussing.

To demonstrate how easy it is to set up a serverless function, I can hop onto my AWS account and create a Lambda function that executes a piece of application logic whenever I invoke it. This really is dead simple, which gives developers a compelling reason to try Lambda functions as a way to build REST APIs or run ad-hoc compute tasks.
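For a sense of how little code is involved, here is a minimal sketch of a Python Lambda handler. The `lambda_handler(event, context)` shape is the standard Python handler signature; the assumption that the function sits behind an API Gateway proxy integration, the `name` query parameter, and the greeting itself are hypothetical, just for illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls once per invocation.

    Assumes an API Gateway proxy-style event, where query string
    parameters arrive under "queryStringParameters" (which may be None).
    """
    params = event.get("queryStringParameters") or {}
    # "name" is a hypothetical query parameter used only for this example.
    name = params.get("name", "world")

    # Proxy integrations expect a statusCode/headers/body dictionary back.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; no AWS account required.
    print(lambda_handler({"queryStringParameters": {"name": "Lambda"}}, None))
```

Because the handler is just a function, you can exercise it locally with a hand-built event before deploying it.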

Additional enhancements, such as container image (Docker) support for Lambda and ECS Fargate for deploying containers, show that companies like AWS are really investing in the future of serverless technology.

The ‘Other’ Meaning of Serverless

Part of the confusion over serverless is that some folks group on-demand computing options together with managed services. These include AWS services such as DynamoDB, a distributed NoSQL database that hides the operational complexity of running a database away from you. Other examples include SQS and SNS, two popular messaging services (a queue and a pub/sub service, respectively) that microservices use to broadcast and consume events.

For the most part, when people say serverless, they are not referring to managed services, but instead referring to compute services such as Lambda or ECS Fargate where servers are not a thing, and developers generally only worry about their code and application logic.

I’m not going to dwell too much on what serverless isn’t, but I figured this section was important so that managed services don’t creep into the discussion and get confused with the term’s actual meaning.

The Pros of Using Serverless

Let’s start out with the goodies: why serverless is a good thing.

1 – No Hardware, No Pain

If it wasn’t already apparent, one of the biggest headaches serverless removes is infrastructure management. Developers no longer have to worry about selecting the right type of hardware, maintenance operations, security vulnerability patching, scaling, and much more.

Personally speaking, I’ve maintained many EC2 and ECS (non-Fargate) based production services, and the amount of time spent on ops to maintain hosts would shock you. And honestly, who wants to monitor hosts these days for CPU, memory, garbage collection, and other metrics critical for evaluating host health – I certainly do not!

The lack of having to deal with hardware naturally leads us to our second big pro of using serverless: ease of use.

2 – Ease of Use

With a couple of clicks, a developer can hop on their AWS account and create a scalable REST API leveraging AWS Lambda. In fact, I have a video on how you can do this in fewer than 8 clicks. Check it out below.

It’s no shock that a big part of why developers love serverless so much is that it’s just so darn easy to get started and start delivering value.

In a fast moving business world, we need the ability to pivot quickly. Sometimes we create services that don’t work out, and other times we create services that flourish in the face of insatiable demand.

This is part of the reason serverless has found a permanent place in the hearts of many developers: it offers the flexibility to build products quickly while simplifying operational overhead. Further, products such as Lambda offer an unbelievable ability to scale to almost any workload, which is our next point of discussion.

3 – Autoscaling

If I were hosting a microservice using AWS EC2, how would I scale it so that it could handle workload spikes, while at the same time not burning a ridiculous amount of money? Well, you’d have to create a bunch of different AWS resources including autoscaling groups, target groups, then assign them to availability zones, before finally giving them a metric to watch in order to determine when to scale up or down. Impossible? Hardly. But honestly, who wants to deal with this unless you absolutely have to?

The simplicity of autoscaling is one of the most attractive features of AWS Lambda.

Lambda supports autoscaling functionality such that when a burst of requests comes in to your function, Lambda will automatically scale itself up so that it can handle the workload without any service compromise.

Autoscaling in Lambda is handled completely for you – it’s always on and requires no configuration. By default, your account can run up to 1,000 concurrent Lambda executions per Region (shared across all of your functions) before invocations get throttled. Note that the 1,000 limit can easily be raised with a quota increase request – it’s mostly there to prevent you from burning a hole in your wallet in case you make a mistake in your function (don’t ask me how I know this).
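A rough way to check whether you’ll bump into that limit is the sizing rule of thumb AWS documents for Lambda: concurrency is roughly requests per second multiplied by average duration in seconds. The sketch below applies it. It assumes steady traffic and the default limit of 1,000, and it ignores burst scaling rates and other functions sharing the same concurrency pool.

```python
import math

# Assumed default account-level limit per Region; check your own quota.
DEFAULT_CONCURRENCY_LIMIT = 1000


def required_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Estimate concurrent executions as arrival rate x average duration
    (Little's law), rounded up to a whole execution environment."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000.0)


def will_throttle(requests_per_second: float, avg_duration_ms: float,
                  limit: int = DEFAULT_CONCURRENCY_LIMIT) -> bool:
    """True if the estimated steady-state concurrency exceeds the limit."""
    return required_concurrency(requests_per_second, avg_duration_ms) > limit


if __name__ == "__main__":
    # 2,000 requests/s at 250 ms each needs about 500 concurrent executions.
    print(required_concurrency(2000, 250))  # 500
    print(will_throttle(5000, 250))         # True (1250 > 1000)
```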

Despite being practically infinitely scalable, Lambda also offers mechanisms to minimize cost, which is our next discussion point.

4 – Cost Effectiveness

Keeping costs low, especially for companies with limited budgets, is an important part of choosing the right compute option.

Lambda, like most if not all other serverless compute options, uses a ‘pay for what you use’ pricing model.

The actual cost is a composite of three inputs:

- Number of Invocations – Staying true to the ‘pay for what you use’ model, you only pay for the number of executions your Lambda function performs.

- Invocation Duration – The shorter your invocations, the less you pay, and vice versa. Keep in mind that a single invocation is capped at 15 minutes. As of December 2020, duration is billed in 1 ms increments.

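- Provisioned Memory – When creating a Lambda function, you assign the amount of memory you would like available for each invocation. Options range from 128 MB (minimum) up to 10,240 MB (10 GB, maximum); the maximum was 3 GB before December 2020. The more memory you request, the higher the cost per millisecond, although Lambda also allocates CPU power in proportion to memory, so more memory can mean shorter invocations.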

There’s a specific formula AWS provides for calculating your costs. But to give you an idea as to whether you’ll achieve any cost savings, note that I’ve managed to save over 50% of my costs on a production workload by changing over from EC2 to Lambda (my manager was happy, to say the least!).
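The formula boils down to a request charge plus a compute charge measured in GB-seconds (memory in GB multiplied by duration in seconds). Here is a minimal sketch of it. The prices are assumptions based on x86 list prices in us-east-1 around the time of writing, and the free tier is ignored, so check the current pricing page before trusting the numbers.

```python
# Assumed list prices (x86, us-east-1, circa 2020-2021); check the current
# AWS Lambda pricing page for your Region and architecture before relying on them.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def monthly_lambda_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate monthly Lambda compute cost in USD, ignoring the free tier.

    cost = request charge + (GB-seconds consumed x price per GB-second)
    """
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Duration is converted to seconds and memory from MB to GB.
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


if __name__ == "__main__":
    # Hypothetical workload: 10M invocations/month, 120 ms average, 512 MB.
    print(round(monthly_lambda_cost(10_000_000, 120, 512), 2))  # 12.0
```

In this made-up example, $2 of the total comes from request charges and roughly $10 from the 600,000 GB-seconds of compute.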

The other good news in the cost effectiveness category is that there are numerous tools to help you pick the right memory settings for your function. These include AWS Compute Optimizer, a recently launched tool built by AWS. Open source options include AWS Lambda Power Tuning, created by Alex Casalboni, which effectively runs a trial-and-error experiment, invoking your function at several memory settings to find the optimal one for you.
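At its core, the decision such an experiment supports looks something like the sketch below: measure the average duration at each memory size, price one invocation at each, and pick the cheapest. The measurements are made up and the prices are assumed x86 us-east-1 list prices; the real tool automates the invoking and measuring for you.

```python
from typing import Dict

# Assumed list prices (x86, us-east-1); adjust for your Region and architecture.
PRICE_PER_REQUEST = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.0000166667


def cost_per_invocation(memory_mb: int, duration_ms: float) -> float:
    """Price of a single invocation at a given memory size and duration."""
    gb_seconds = (memory_mb / 1024.0) * (duration_ms / 1000.0)
    return PRICE_PER_REQUEST + gb_seconds * PRICE_PER_GB_SECOND


def cheapest_memory(measurements: Dict[int, float]) -> int:
    """Pick the memory size (MB) with the lowest cost per invocation.

    `measurements` maps memory size in MB -> measured average duration in ms.
    """
    return min(measurements, key=lambda mb: cost_per_invocation(mb, measurements[mb]))


if __name__ == "__main__":
    # Hypothetical averages from invoking the same payload at each setting.
    measured = {128: 2400.0, 256: 1150.0, 512: 520.0, 1024: 300.0}
    print(cheapest_memory(measured))  # 512
```

Notice that in this hypothetical data the cheapest setting is not the smallest one: the extra memory (and the CPU that comes with it) shortens each run enough to pay for itself.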

Overall, using serverless options such as Lambda really helps drive down the cost of your applications with minimal drawbacks.

5 – AWS is Doubling Down on Serverless

In Andy Jassy’s re:Invent 2020 keynote, he discussed the growth of serverless computing and how its popularity has skyrocketed in recent years. He went on to discuss many of the improvements AWS is making in this domain to make developers’ lives easier.

One notable feature is provisioned concurrency: the ability to keep your Lambda function ‘always on’ so that there is always an instance ready and willing to receive traffic (as opposed to having to provision one on the fly).

Another notable one is RDS Proxy, which protects developers using Lambda from browning out their RDS database with too many concurrent connections from Lambda functions.

With RDS Proxy, developers can now access their RDS instance through a connection pool manager where connections are maintained and re-used for subsequent requests – neat-o!

Still more features, such as Amazon EFS for Lambda, which lets developers mount a shared file system to their Lambda functions, help bridge the gap between serverless and provisioned infrastructure.

I fully expect next year’s re:Invent to contain more serverless announcements, and hopefully some even before then in the mid-year.

You may enjoy my article on AWS re:Invent 2020 – Andy Jassy Keynote Announcements Summary

Pros Summary

- No hardware means less setup time and fewer things to monitor and maintain.

- Setting up serverless functions is super simple.

- Lambda autoscaling handles bursty traffic for you – automatically and by default.

- Pay only for what you use – in my opinion the best pricing model out there.

- More and more features – AWS is doubling down and given the growth of Lambda/serverless, I don’t expect the feature parade to stop any time soon.

The Cons of Using Serverless

So far, I’ve glorified serverless quite a bit. I hope I didn’t make it seem like it has no drawbacks, because after all, we can’t always have our cake and eat it too. So let’s talk about why serverless could be a bad thing.

1 – Cold Starts

One of the biggest problems with serverless is a concept called Cold Start.

Cold start is a side effect of ‘just in time computing’. You see, Lambda’s architecture will automatically scale your function down during periods of low or no traffic. And when I say down, I mean all the way down – like ZERO.

This means that after a period of inactivity, if a new request (or, heaven forbid, a burst of requests) comes in, Lambda will have no instances with your code ready to serve it. As a result, Lambda will need to internally provision and launch new containers to handle the incoming traffic.

The result for your client is a pretty hefty delay for your initial calls. This is particularly a problem for applications that are cyclical in nature (activity during the day, no activity at night).
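One way to see cold starts in your own function is to rely on the fact that module-level code runs once per execution environment, while the handler runs on every invocation. This sketch flips a module-level flag on the first invocation; the flag name is hypothetical, and where you send the value (logs, metrics) is up to you.

```python
# Module-level code runs once per execution environment (i.e. during a cold
# start); the handler below runs on every invocation.
_is_cold = True


def lambda_handler(event, context):
    """Report whether this invocation paid the cold start penalty."""
    global _is_cold
    cold = _is_cold
    _is_cold = False  # Later invocations in this same environment are warm.
    # In a real function you would log this value (print() output ends up in
    # CloudWatch Logs) and track how often cold starts happen.
    return {"cold_start": cold}


if __name__ == "__main__":
    print(lambda_handler({}, None))  # {'cold_start': True}
    print(lambda_handler({}, None))  # {'cold_start': False}
```

A bursty or cyclical workload shows up here as a high ratio of `True` results after idle periods, since each new container starts cold.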

Some languages fare better than others, with Java being the worst. With Java (especially if you have a lot of dependencies in your .jar), I’ve seen cold starts last as long as 1 minute.

With other languages such as Python or Node.js, this time can be greatly reduced, but not fully eliminated unless other, more drastic and expensive measures are taken (ahem, provisioned concurrency).

Developers can get around this phenomenon by using a newer Lambda feature called Provisioned Concurrency. Provisioned Concurrency does what you might expect: it maintains a pool of containers that are actively hosting your code and waiting for requests, even during times of low or zero traffic.

But wait – provisioned concurrency, doesn’t that sound a lot like non-serverless architecture? YES! And this, my friend, is where we’ve come full circle back to hardware management, but without actually worrying about the hardware itself.

2 – Feature Restrictions

The truth about using serverless is that it can’t do everything a service with provisioned hardware can do.

One of the most common problems developers have is that Lambda function invocations have a hard limit of 15 minutes. If your invocation goes beyond this duration, it will automatically time out and throw an exception.
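A common way to live with the limit is to check how much time is left and hand off unfinished work before the timeout fires (the per-function timeout is configurable up to 900 seconds). The Lambda context object’s `get_remaining_time_in_millis()` method exists for exactly this. In the sketch below, `process_item`, the 10-second safety margin, and returning the remainder for a follow-up invocation are illustrative assumptions, and `FakeContext` is a local stand-in so the code runs outside AWS.

```python
SAFETY_MARGIN_MS = 10_000  # Assumed buffer; tune to your slowest single item.


def process_item(item):
    """Hypothetical unit of work; replace with your real processing."""
    return item * 2


def lambda_handler(event, context):
    """Process as many items as fit before the timeout, then hand back the rest."""
    items = event.get("items", [])
    results = []
    for index, item in enumerate(items):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Return the unprocessed remainder so a follow-up invocation
            # (or a queue, Step Functions loop, etc.) can pick it up.
            return {"results": results, "remaining": items[index:]}
        results.append(process_item(item))
    return {"results": results, "remaining": []}


class FakeContext:
    """Local stand-in for the Lambda context; each check burns a fixed cost."""

    def __init__(self, budget_ms, cost_per_call_ms):
        self.budget_ms = budget_ms
        self.cost_per_call_ms = cost_per_call_ms

    def get_remaining_time_in_millis(self):
        remaining = self.budget_ms
        self.budget_ms -= self.cost_per_call_ms
        return remaining


if __name__ == "__main__":
    out = lambda_handler({"items": [1, 2, 3, 4]}, FakeContext(30_000, 10_000))
    print(out)  # {'results': [2, 4, 6], 'remaining': [4]}
```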

This isn’t a huge deal, but it can be a deal breaker for some folks looking to use Lambda for heavy data processing. Honestly, this isn’t what Lambda is meant for, and I don’t mind Lambda putting this restriction in place for use cases that clearly go against the grain of Lambda’s purpose.

3 – Too Much AWS ‘Stuff’

Another common complaint I hear a lot is about the number of Lambda functions you need to create to get the equivalent of a traditional REST API hosted on an nginx box.

This is certainly true – each Lambda function requires its own setup, configuration, and monitoring. In contrast, dedicated services hosted on provisioned hardware let you perform setup once and expand it (e.g., by adding new APIs) as the need arises.

With tools such as AWS CloudFormation (AWS’ Infrastructure as Code product), this becomes less of an issue, but it’s still an issue that can be frustrating to deal with. As a hint, try using AWS’ tagging feature to organize your resources.

The truth of the matter is that these complaints are warranted. Building a full-fledged microservice on AWS will require you to create a lot of AWS ‘stuff’ such as IAM roles, secret keys, CloudWatch dashboards, log groups, metric filters, and many more items.

You may enjoy my article on AWS CloudFormation: Concepts, Workflows, and a Hands on Tutorial

Cons Summary

- Cold Starts can cause high latency for initial requests after times of low activity. Although this can be solved using Provisioned Concurrency, it is expensive!

- Lambda places restrictions on you such as the duration of your invocations, and the amount of memory you can provision per invocation.

- Using Lambdas can sometimes require you to generate a lot of other AWS ‘stuff’ which may become difficult to manage and track.

So, When Should You Use Lambdas, and When Should You Not?

Ah, the age old question – when should I use this thing?

Unfortunately, this question has gotten trickier to answer, especially as Lambda and other serverless offerings have released new features to address some of the shortcomings that were brought to light. However, I’ll give you some general guidance on when I think Lambda is a good choice, and when it isn’t.

It’s a good choice when…

- Event processing – Lambda thrives in event processing scenarios such as polling and processing messages from an SQS queue. Paying only for what you use means you’re not burning your $$$ in a pit when there are no messages to be processed.

- Bursty workloads – Lambda is great for bursty workloads followed by periods of inactivity. As long as you can tolerate some high latency for your initial calls, then Lambda is a good option for this use case.
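Here is a minimal sketch of the event processing case. Lambda’s SQS integration polls the queue for you and delivers each batch under `event["Records"]`, where every record carries a `messageId` and a `body`. Returning `batchItemFailures` lets Lambda retry only the failed messages, but only if ReportBatchItemFailures is enabled on the event source mapping; `handle_message` and its `order_id` check are hypothetical business logic.

```python
import json


def handle_message(payload):
    """Hypothetical business logic; raises on messages it cannot process."""
    if not isinstance(payload, dict) or "order_id" not in payload:
        raise ValueError("missing order_id")
    return payload["order_id"]


def lambda_handler(event, context):
    """Process an SQS batch and report only the messages that failed."""
    failures = []
    for record in event.get("Records", []):
        try:
            handle_message(json.loads(record["body"]))
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError.
            failures.append({"itemIdentifier": record["messageId"]})
    # Messages listed here stay on the queue for retry; the rest are deleted.
    return {"batchItemFailures": failures}


if __name__ == "__main__":
    sample = {"Records": [
        {"messageId": "m1", "body": json.dumps({"order_id": 42})},
        {"messageId": "m2", "body": "not json"},
    ]}
    print(lambda_handler(sample, None))  # {'batchItemFailures': [{'itemIdentifier': 'm2'}]}
```

When the queue is empty, nothing is invoked, which is exactly where the pay-for-what-you-use model shines.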

It’s a bad choice when…

- Sustained Workloads – If you have a use case where you have a sustained level of workload, whether it be event processing, API requests, or something else, you most definitely don’t want to use Lambda. You’ll save money by going with provisioned hardware.

- You’re Latency Sensitive – Due to how Lambda works (just in time computing), there is always a possibility that an invocation will result in a new container needing to be spun up, thus generating additional latency overhead. Unless you’re willing to pay for provisioned concurrency to mitigate this problem, Lambda may not be for you.
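To put a rough number on the sustained-workload point, you can compute the steady request rate at which Lambda’s pay-per-use bill overtakes a fixed monthly instance price. This is a sketch under loud assumptions: the Lambda prices are assumed x86 us-east-1 list prices, the instance price you pass in is up to you, and it ignores the free tier, the extra instances you would need for redundancy, and the ops time discussed in the pros section.

```python
# Assumed Lambda list prices (x86, us-east-1); replace with current numbers.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month, for simplicity.


def lambda_monthly_cost(rps: float, avg_duration_ms: float, memory_mb: int) -> float:
    """Monthly Lambda bill for a steady request rate (free tier ignored)."""
    invocations = rps * SECONDS_PER_MONTH
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    return invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS + gb_seconds * PRICE_PER_GB_SECOND


def break_even_rps(instance_monthly_cost: float, avg_duration_ms: float, memory_mb: int) -> float:
    """Steady requests/second above which Lambda costs more than the instance.

    Lambda cost is linear in the request rate, so the break-even point is the
    instance price divided by the monthly cost of 1 request per second.
    """
    return instance_monthly_cost / lambda_monthly_cost(1.0, avg_duration_ms, memory_mb)


if __name__ == "__main__":
    # Hypothetical $60/month instance; 100 ms invocations at 512 MB.
    print(round(break_even_rps(60.0, 100, 512), 1))  # 22.4
```

With these made-up inputs, anything above roughly 22 requests per second of round-the-clock traffic would be cheaper on the instance, assuming one instance can actually handle that load.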

Closing Thoughts

In this article we talked a lot about what Lambda is, what it isn’t, and why it’s both good and bad. Like anything, Lambda/serverless is a tool that is suitable for certain use cases, but not for others.

Some may have you think serverless is going to completely take over and we’ll never deal with infrastructure again. I laugh at this notion – honestly, there will always be times when dedicated hosting is valuable. It’s up to you to make an informed decision and pick the right tool for the job.

If you liked this kind of content, check out my YouTube channel over at www.youtube.com/c/beabetterdev !