If you’re just getting started with AI, you’ve probably used it as a coding assistant, for answering questions, or for performing small tasks. But a ton more capability can be unlocked by leveraging AI Agents. In this post, I’m going to explain exactly what AI Agents are and why they’re useful. We’re going to look at this from a software developer’s perspective, focusing on what agents are and how to actually build real applications that leverage them.

The Problem: Why Standard LLMs Fall Short

To understand agents, we first need to understand two key problems with traditional Large Language Models (LLMs) that you’ve probably already encountered.

1. Limited Reasoning Capabilities

Imagine you provide a prompt like this to ChatGPT:

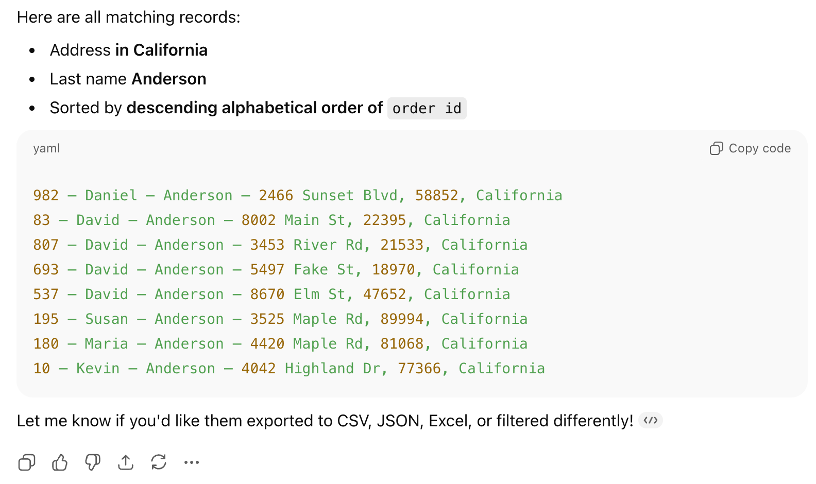

“Given the following customers file, find all records with an address in California. Sort the order descending by order id and only provide records where Anderson is the last name.”

Earlier versions of ChatGPT (those without agent support) would often respond with an answer that looks mostly correct, as in the screenshot. Notice that the matching records are all there, but they are not sorted in descending order as requested.

Herein lies the issue: on their own, most LLMs handle multi-step instructions poorly. They try to predict the entire answer in one shot rather than working through the steps in sequence.
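To make the multi-step nature of that prompt concrete, here is a minimal Python sketch of the three operations it asks for. The records and field names (`order_id`, `last_name`, `state`) are made up for illustration; they are not the contents of the file in the screenshot.

```python
# Hypothetical customer records; the field names are assumptions for illustration.
customers = [
    {"order_id": 101, "last_name": "Anderson", "state": "CA"},
    {"order_id": 205, "last_name": "Anderson", "state": "CA"},
    {"order_id": 150, "last_name": "Lopez", "state": "CA"},
    {"order_id": 310, "last_name": "Anderson", "state": "TX"},
    {"order_id": 178, "last_name": "Anderson", "state": "CA"},
]

def california_andersons(records):
    # Step 1: keep only records with a California address.
    in_california = [r for r in records if r["state"] == "CA"]
    # Step 2: keep only records whose last name is Anderson.
    andersons = [r for r in in_california if r["last_name"] == "Anderson"]
    # Step 3: sort by order id, highest first.
    return sorted(andersons, key=lambda r: r["order_id"], reverse=True)

result = california_andersons(customers)
print([r["order_id"] for r in result])  # [205, 178, 101]
```

Skipping or botching any one of the three steps gives an answer that looks plausible but is wrong, which is exactly the failure described above.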

2. The “In-a-Box” Limitation

The second major limitation is the data an LLM can access. In a toy example, you might provide an input file. But what if that information lives in a database, a Google Drive folder, or requires an API call?

As it stands, a base AI is “trapped in a box.” It has no built-in ability to go and retrieve information or interact with external systems. Whether it’s checking the weather or querying a production database, standard LLMs lack the “hands” to interact with the outside world.

The Solution: The ReAct Framework

These two problems, reasoning and access, are exactly what agents solve. They give an AI stronger reasoning for difficult problems AND access to external tools it can call upon as needed.

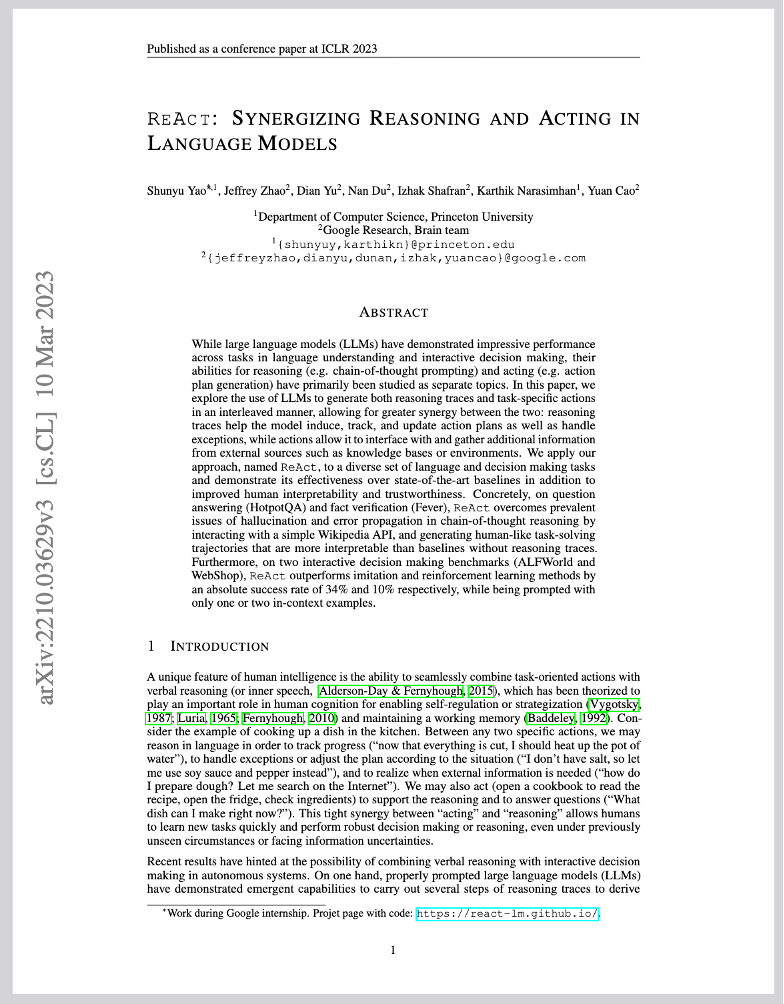

This concept was popularized by the paper ReAct: Synergizing Reasoning and Acting in Language Models, presented at ICLR 2023.

The authors found that if you ask an AI to break a problem down into multiple steps (planning out its approach) and act on them one at a time, you get a much higher quality answer. The AI does this through what’s called a ReAct (Reasoning + Acting) loop.

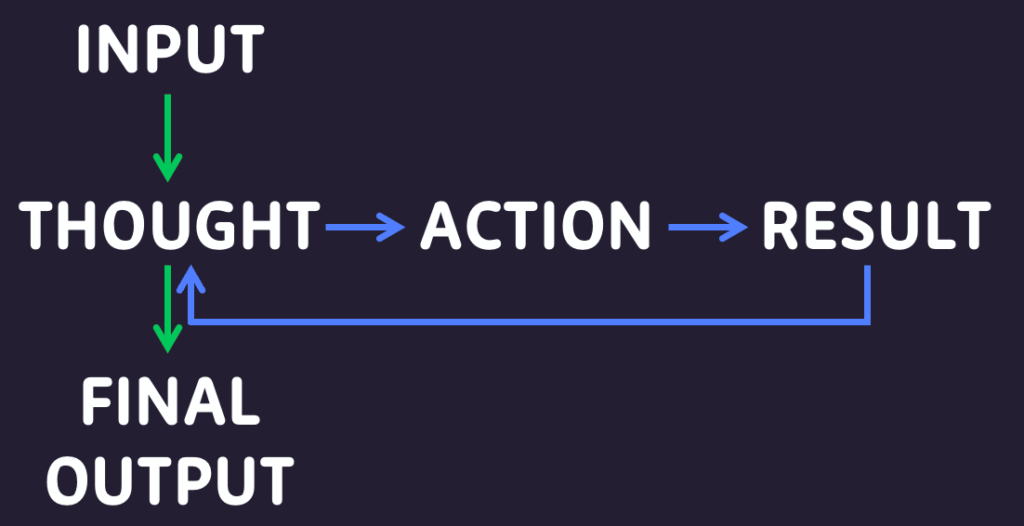

The loop involves:

- Thought: The model writes down what it is thinking and what it needs to do next.

- Action: It performs an action (e.g., searching a database).

- Observation: It analyzes the result and feeds it back into the beginning of the loop.

This cyclical process of planning, executing, and observing is the key role of an Agent. The implications are profound, unlocking a whole new universe of potential for both software and general problem-solving.
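The Thought, Action, Observation cycle can be sketched in a few lines of Python. This is a toy illustration, not the paper’s implementation: `scripted_model` is a hypothetical stand-in for a real LLM call, `lookup_order_count` is a made-up tool, and the `Action: name[argument]` text format is just one convention chosen for this example.

```python
import re

# Hypothetical tool: a tiny in-memory "database" lookup.
def lookup_order_count(state: str) -> str:
    orders_by_state = {"CA": 3, "TX": 1}
    return str(orders_by_state.get(state, 0))

TOOLS = {"lookup_order_count": lookup_order_count}

def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM call; returns canned text based on the transcript so far."""
    if "Observation:" not in transcript:
        return ("Thought: I need the number of orders in California.\n"
                "Action: lookup_order_count[CA]")
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return (f"Thought: The tool returned {observation}, so I can answer.\n"
            f"Final Answer: California has {observation} orders.")

def run_agent(question: str, model=scripted_model, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        # Thought: the model reasons and either picks an action or answers.
        reply = model(transcript)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*?)\]", reply)
        if match is None:
            raise ValueError("model reply contained neither an action nor a final answer")
        name, argument = match.groups()
        # Action: run the requested tool.
        observation = TOOLS[name](argument)
        # Observation: append the result so the next turn can use it.
        transcript += f"\nObservation: {observation}"
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("How many orders are in California?"))
```

The `max_steps` cap is a design choice worth keeping in real code too: it stops a confused model from looping forever.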

Tools: The Agent’s “Hands”

How do agents actually interact with the outside world? That’s where Tools come in. Tools are the glue that lets LLMs access information or perform operations on outside systems.

As the developer, you specify which tools your application has access to. Using the tool definition and descriptions, the LLM acquires the ability to invoke those tools when appropriate.
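As a rough sketch of what “tool definition and descriptions” means in practice, the snippet below turns an ordinary Python function into the kind of name, description, and parameter summary a framework might hand to the model. Both `get_weather` and `describe_tool` are hypothetical names for this example; real frameworks build a similar structure for you, and the exact format differs between them.

```python
import inspect

def get_weather(city: str) -> str:
    """Return the current weather for a city. Use this when the user asks about weather."""
    return f"Sunny in {city}"  # Placeholder; a real tool would call a weather API.

def describe_tool(func) -> dict:
    """Build a name/description/parameters summary for a tool function."""
    signature = inspect.signature(func)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            # Use the annotation's name (e.g. "str") when it has one.
            name: getattr(param.annotation, "__name__", str(param.annotation))
            for name, param in signature.parameters.items()
        },
    }

spec = describe_tool(get_weather)
print(spec["name"], spec["parameters"])
```

The model never sees the function body. It only sees this summary, which is why the wording of the description matters so much.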

Existing vs. Custom Tools

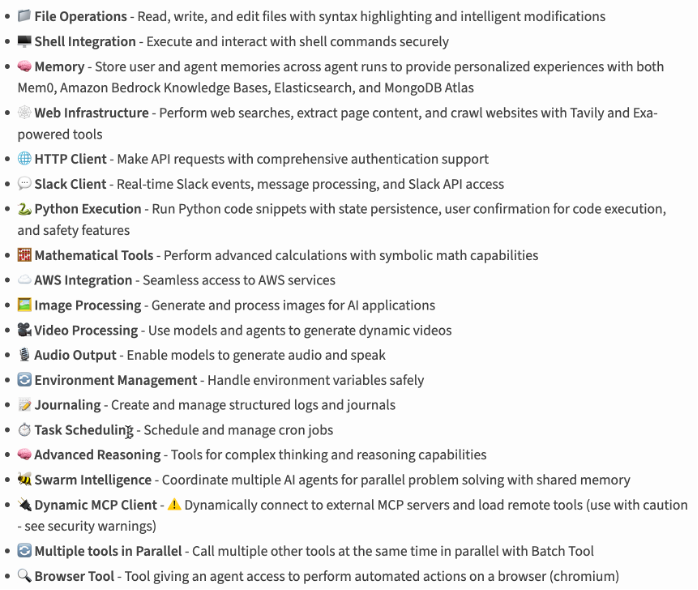

- Existing Tools: Most frameworks offer community-developed tools to perform operations on your file system, interact with your Shell, access memory, or make HTTP requests.

Here are a couple of examples of community-developed tools available in AWS’s Strands Agents framework.

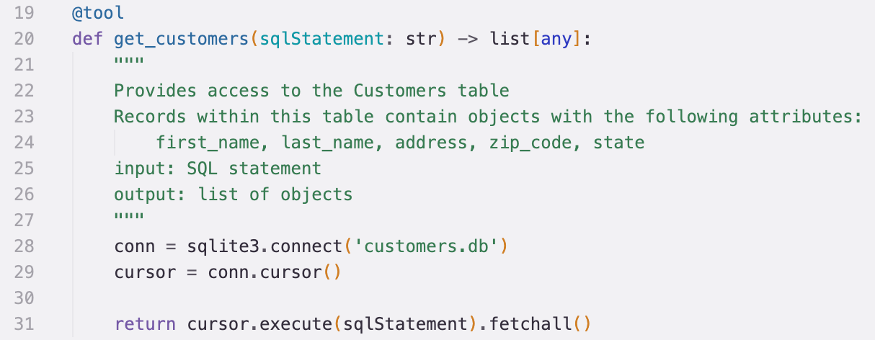

- Custom Tools: You can implement your own with simple annotations. For example, a get_customers function that takes a SQL statement and returns records.

Pro Tip: Detailed descriptions are vital. The LLM uses your docstrings to decide when it should invoke a tool. The better the description, the more reliable the agent.
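Here is a minimal sketch of what such a get_customers tool could look like. To keep it runnable with only the standard library, the `tool` decorator below is a hypothetical stand-in for a framework’s annotation (it is not the Strands API), and the table layout and rows are invented for the example.

```python
import sqlite3

def tool(func):
    """Hypothetical stand-in for a framework's tool annotation; it only tags the function."""
    func.is_tool = True
    return func

# In-memory database with made-up rows so the example runs on its own.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE customers (order_id INTEGER, last_name TEXT, state TEXT)")
connection.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(101, "Anderson", "CA"), (205, "Anderson", "CA"),
     (150, "Lopez", "CA"), (310, "Anderson", "TX")],
)

@tool
def get_customers(sql: str) -> list:
    """Run a read-only SELECT against the customers table and return the matching rows.

    Columns: order_id (integer), last_name (text), state (two-letter code).
    Use this whenever the user asks about customers or their orders.
    """
    # Minimal guard: reject anything that is not a SELECT statement.
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return connection.execute(sql).fetchall()

rows = get_customers(
    "SELECT order_id FROM customers "
    "WHERE state = 'CA' AND last_name = 'Anderson' ORDER BY order_id DESC"
)
print(rows)  # [(205,), (101,)]
```

Note how the docstring spells out the columns and when to use the tool. The SELECT check is only a first line of defense; for anything beyond a demo, a read-only database connection is a safer way to let a model write SQL.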

How to Build: Strands vs. LangGraph

To build these applications, you need an orchestration framework. Today, you have two main paths:

- Strands Agents: Developed by AWS, this has native integration with services like Amazon Bedrock. It’s a high-level framework: you define the goal, provide the tools, and let the agent figure out the path. It’s perfect for rapid prototyping.

- LangGraph: This is a lower-level, more feature-rich option. You define specific nodes (tasks) and edges (transitions). It gives you more control with branches and guardrails but comes with a steeper learning curve.
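To give a feel for the nodes-and-edges style, here is a hand-rolled sketch in plain Python. It does not use the LangGraph API; the node names, the `run_graph` helper, and the routing rule are all assumptions made up for this example.

```python
# Each node is a function that takes the shared state and returns an updated copy.
def classify(state: dict) -> dict:
    needs_lookup = "order" in state["question"].lower()
    return {**state, "needs_lookup": needs_lookup}

def lookup(state: dict) -> dict:
    return {**state, "data": "3 orders found"}  # Placeholder for a real tool call.

def answer(state: dict) -> dict:
    text = state.get("data", "No lookup was needed.")
    return {**state, "answer": text}

NODES = {"classify": classify, "lookup": lookup, "answer": answer}

# Each edge maps a node to a function that picks the next node (None ends the run).
EDGES = {
    "classify": lambda s: "lookup" if s["needs_lookup"] else "answer",  # branch
    "lookup": lambda s: "answer",
    "answer": lambda s: None,
}

def run_graph(question: str, start: str = "classify") -> dict:
    state = {"question": question, "path": []}
    current = start
    while current is not None:
        state = NODES[current](state)
        state["path"] = state["path"] + [current]  # Record the route taken.
        current = EDGES[current](state)
    return state

print(run_graph("How many orders are in California?")["path"])
print(run_graph("Say hello")["path"])
```

The first call goes through classify, lookup, and answer; the second skips lookup. Spelling out every transition like this is the extra control (and extra work) that the lower-level approach trades for.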

Summary

Tools are the secret sauce that lets LLMs go far beyond simple chatbots. Combined, agents and tools create a powerful duo that can perform complex reasoning and take real-world actions. If you’re just getting started, I suggest starting with Strands Agents before diving into the deeper waters of LangGraph.